Insightful Data Visualizations You Need to See — DataViz Weekly

Updated: 2025-03-07 09:42:25

Data visualization is powerful — when done right, it conveys meaning clearly and helps insights emerge naturally. We continue DataViz Weekly, where we regularly highlight some excellent examples from around the web, offering a firsthand look at how graphical representation can effectively reveal and clarify information. This time, we think you just need to see […]

The post Insightful Data Visualizations You Need to See — DataViz Weekly appeared first on AnyChart News.

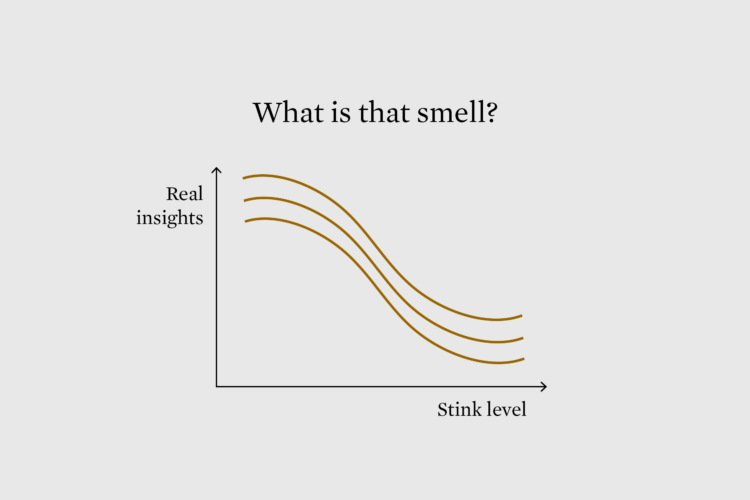

The first part of visualizing data usefully is making sure the data you're working with is not terrible.

Tags: expectations, formatting, missing data, outlier

The first part of visualizing data usefully is making sure the data you're working with is not terrible.Tags: expectations, formatting, missing data, outlier